Data Lake

Big data has evolved for about 10 years since its birth. Today the most popular big data solution is no longer Hadoop but the data lake, which covers data ingestion, data storage, processing and analysis, and inference.

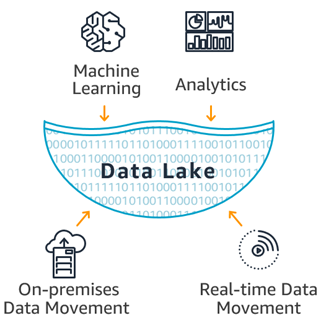

A data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale. You can store your data as-is, without having to first structure the data, and run different types of analytics—from dashboards and visualizations to big data processing, real-time analytics, and machine learning to guide better decisions.

Before the big data era, you have probably heard of decision support systems, data warehouses, and business intelligence. These tools are designed mainly for structured data analysis, such as OLAP workloads. Big data, however, includes not only structured data but also semi-structured and unstructured data, and these tools are poorly suited to it (high cost or limited compute and storage). Around that time, Google introduced MapReduce, its framework for processing log files and web pages at scale. Its open-source implementation, Apache Hadoop, combined strong processing capability with cost-effectiveness and became the market darling. To this day, Hadoop-based big data platforms such as Cloudera and MapR remain the most popular on-premises big data solutions.
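
To make the MapReduce model concrete, here is a minimal single-process sketch in Python that counts HTTP status codes in log lines. It is not Hadoop's API: the log format, the sample data, and the function names `map_phase`, `shuffle`, `reduce_phase`, and `map_reduce` are hypothetical. A real framework would run the map and reduce tasks in parallel across many machines and shuffle the intermediate pairs over the network.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Hypothetical access-log lines in the format "<ip> <path> <status>".
LOG_LINES = [
    "10.0.0.1 /index.html 200",
    "10.0.0.2 /login 404",
    "10.0.0.1 /index.html 200",
    "10.0.0.3 /api 500",
    "10.0.0.2 /index.html 200",
]


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map: emit a (status_code, 1) pair for each log line."""
    _ip, _path, status = line.split()
    yield status, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all emitted values by their key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: sum the counts collected for one key."""
    return key, sum(values)


def map_reduce(lines: Iterable[str]) -> dict[str, int]:
    """Chain map -> shuffle -> reduce in a single process."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(map_reduce(LOG_LINES))
```

Running it prints `{'200': 3, '404': 1, '500': 1}`. Because each map call looks at one line and each reduce call looks at one key, both phases can be split across machines independently, which is what let the model scale on cheap commodity hardware.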

In practice, a data lake combines the functionality of a data warehouse (DWH) and Hadoop, yet it differs from a DWH in several key ways. Let's explore those differences.

A data warehouse is a database optimized to analyze relational data coming from transactional systems and line of business applications. The data structure, and schema are defined in advance to optimize for fast SQL queries, where the results are typically used for operational reporting and analysis. Data is cleaned, enriched, and transformed so it can act as the “single source of truth” that users can trust.

| Characteristics | Data Warehouse | Data Lake |
|---|---|---|
| Data | Relational from transactional systems, operational databases, and line of business applications | Non-relational and relational from IoT devices, web sites, mobile apps, social media, and corporate applications |
| Schema | Designed prior to the DW implementation (schema-on-write) | Written at the time of analysis (schema-on-read) |
| Price/Performance | Fastest query results using higher cost storage | Query results getting faster using low-cost storage |
| Data Quality | Highly curated data that serves as the central version of the truth | Any data that may or may not be curated (i.e. raw data) |
| Users | Business analysts | Data scientists, Data developers, and Business analysts (using curated data) |
| Analytics | Batch reporting, BI and visualizations | Machine Learning, Predictive analytics, data discovery and profiling |

Table 1: Data warehouse vs. data lake comparison
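
The schema row of Table 1 can be illustrated with a small Python sketch. It is an assumption-laden toy, not how any particular warehouse or lake engine works: the `Purchase` schema, the sample JSON events, and the functions `load_into_warehouse`, `query_purchases`, and `query_total_clicks` are all hypothetical. The warehouse path validates records at load time and rejects anything that does not fit; the lake path keeps raw records as-is and lets each query apply its own schema.

```python
import json
from dataclasses import dataclass

# Hypothetical raw events with inconsistent shapes, as they might arrive from apps.
RAW_EVENTS = [
    '{"user": "alice", "amount": "12.50", "device": "ios"}',
    '{"user": "bob", "amount": 7}',
    '{"user": "carol", "clicks": 3}',
]


@dataclass
class Purchase:
    user: str
    amount: float


def load_into_warehouse(raw_lines: list[str]) -> tuple[list[Purchase], list[str]]:
    """Schema-on-write: parse and validate every record at load time.
    Records that do not fit the predefined Purchase schema are rejected, never stored."""
    stored: list[Purchase] = []
    rejected: list[str] = []
    for raw in raw_lines:
        record = json.loads(raw)
        try:
            stored.append(Purchase(user=str(record["user"]), amount=float(record["amount"])))
        except (KeyError, TypeError, ValueError):
            rejected.append(raw)
    return stored, rejected


def query_purchases(lake: list[str]) -> list[Purchase]:
    """Schema-on-read: raw records stay untouched in the lake;
    this query applies the Purchase schema only when it runs."""
    result: list[Purchase] = []
    for raw in lake:
        record = json.loads(raw)
        if "amount" in record:
            result.append(Purchase(user=str(record["user"]), amount=float(record["amount"])))
    return result


def query_total_clicks(lake: list[str]) -> int:
    """A different query applies a different schema to the same raw data."""
    return sum(int(json.loads(raw).get("clicks", 0)) for raw in lake)


if __name__ == "__main__":
    warehouse, rejected = load_into_warehouse(RAW_EVENTS)
    lake = list(RAW_EVENTS)  # the lake simply stores the raw lines
    print("warehouse rows:", warehouse)
    print("rejected at load:", rejected)
    print("lake purchases:", query_purchases(lake))
    print("lake total clicks:", query_total_clicks(lake))
```

In this toy, the event without an `amount` is dropped by the warehouse load, while the lake still holds it, so a clicks analysis that nobody planned for at ingestion time can still use it. The trade-off is that every lake query must cope with messy data itself.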

The data lake grew out of Hadoop and has evolved considerably since. Hadoop is built on the principle of data locality (moving computation to the nodes that store the data), which made it cost-effective and popular. Hardware prices have since dropped a lot: for the same money we can now buy SSDs with very high read speeds. Because of data locality, however, computation and storage in Hadoop are tightly coupled, which leads to poor scalability.

For example, I encountered a real case of a company using an on-premises Hadoop cluster as its big data platform. Over the past year its compute utilization has been about 15%, while its storage utilization has reached about 70%, so it must scale out the cluster to meet its storage requirements. Since every new node adds CPUs as well as disks, scaling out will push CPU utilization even lower. The key difference between a data lake and a Hadoop cluster is therefore the decoupling of compute and storage. Beyond better scalability, a data lake on the cloud can scale storage and compute independently, with capacity that is effectively unlimited.
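
The arithmetic behind this example can be sketched with a simple model. It assumes, hypothetically, a 10-node cluster with uniform load, constant compute demand, and a target storage utilization of 50%; only the 15% and 70% figures come from the case above, and the function names `scale_coupled` and `scale_decoupled` are illustrative.

```python
def scale_coupled(nodes: int, cpu_pct: float, storage_pct: float,
                  target_storage_pct: float) -> tuple[int, float, float]:
    """Coupled (Hadoop-style) nodes: each added node brings both disks and CPUs.
    Add nodes until average storage utilization drops to the target; the same
    compute demand is then spread over more nodes.
    Returns (new_node_count, new_cpu_pct, new_storage_pct)."""
    if target_storage_pct <= 0:
        raise ValueError("target_storage_pct must be positive")
    new_nodes = nodes
    while storage_pct * nodes / new_nodes > target_storage_pct:
        new_nodes += 1
    return new_nodes, cpu_pct * nodes / new_nodes, storage_pct * nodes / new_nodes


def scale_decoupled(compute_nodes: int, cpu_pct: float, storage_pct: float,
                    target_storage_pct: float) -> tuple[int, float, float]:
    """Decoupled compute and storage: grow storage capacity on its own and leave
    the compute nodes, and their utilization, unchanged.
    Returns (compute_nodes, cpu_pct, storage_capacity_multiplier)."""
    if target_storage_pct <= 0:
        raise ValueError("target_storage_pct must be positive")
    return compute_nodes, cpu_pct, storage_pct / target_storage_pct


if __name__ == "__main__":
    n, cpu, storage = scale_coupled(10, 15.0, 70.0, 50.0)
    print(f"coupled: {n} nodes, CPU {cpu:.1f}%, storage {storage:.1f}%")
    c, cpu_d, factor = scale_decoupled(10, 15.0, 70.0, 50.0)
    print(f"decoupled: {c} compute nodes, CPU {cpu_d:.1f}%, storage x{factor:.1f}")
```

Under these assumptions the coupled cluster grows to 14 nodes and CPU utilization falls from 15% to about 10.7%, so the company pays for four nodes' worth of mostly idle CPUs. The decoupled design instead grows only storage capacity (by 1.4x here) and keeps compute where it is.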